The Effective Way To Ensure A/B Tests and Experiments Yield Insightful Results

Whether or not you consider your latest A/B test a success, there are always results to measure. History is full of failed experiments in fields ranging from science to religion and technology, and some of those failures produced insights that shifted fundamental understanding.

To build A/B testing that reliably yields useful results, it helps to frame the methodology around a few rules.

Seven steps for efficient A/B testing

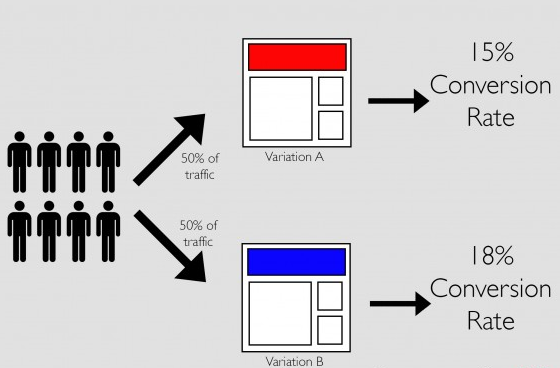

Most A/B tests are tied to KPIs. For example, if you run an e-commerce website and want to optimise conversions further down the funnel by increasing add-to-cart events, you need clear evidence of which variation of your website performs better. So you test a few options and pick the best performer based on data. Generally, if a test fails to beat the status quo, you consider it a failure and try again until you improve.

Sometimes A/B tests fail. What can we learn from them? By taking a more scientific approach, we not only run better tests in the future but also get a clearer idea of what not to do next time.

Whatever field you are testing in, developing and following a consistent methodology greatly increases the chances of a positive outcome. Start with the rules listed below, and add some of your own.

#1 Create a central question

The central question should drive your entire experiment. If your hypothesis is that your website needs fewer forms, the central question is which forms to remove, and testing comes next. Another example: would offering a discount to new users benefit your business? Questions like these are hard to answer without data and need to be tested.

#2 Form a hypothesis about your central question

You know your business best. Use all the information you already have rather than drawing conclusions out of thin air. If you have previously tested or run campaigns similar to the one you are testing now, study that data and expect some connection. If you ran a discount last year on a similar item for a similar target audience, expect similar results. If the earlier test had problems, expect them here too, and make sure not to repeat the same mistakes.

If, for example, your hypothesis is that the hero section or main banner of the landing page needs reworking, you will later need to compare its results against the current version. Change nothing else and test one thing at a time, so that any difference in results can be attributed to that single change.

Another important point is that traffic comes from different sources. Some of your traffic is cold, some warm and some hot. Each responds differently during the experiment and can skew the results in one direction or another. Limit your test variables to the ones that are specifically relevant to your experiment.
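One common way to stop the mix of cold, warm and hot traffic from favouring one variant is to assign visitors to variants at random, independently of where they came from, so each variant receives roughly the same mix. A widely used approach is deterministic hashing of a visitor ID. The Python sketch below is a minimal illustration, not a prescribed method; the function name, experiment key and visitor IDs are hypothetical, and a real setup would use whatever identifier and testing tool you already have.

```python
import hashlib
from collections import Counter


def assign_variant(visitor_id: str, experiment: str,
                   variants: tuple = ("control", "treatment")) -> str:
    """Map a visitor to a variant deterministically.

    Hashing the experiment name together with the visitor ID gives every
    visitor a stable, effectively random bucket: a returning visitor always
    sees the same version, and the bucket does not depend on traffic source.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Hypothetical visitors, tagged by traffic temperature, to check the split.
sources = ["cold", "warm", "hot"]
counts = Counter()
for i in range(9_000):
    source = sources[i % 3]
    variant = assign_variant(f"visitor-{i}", "hero-banner-test")
    counts[(source, variant)] += 1

for source in sources:
    print(source, counts[(source, "control")], counts[(source, "treatment")])
```

Because the assignment depends only on the visitor ID and the experiment name, each traffic source ends up split roughly evenly, and renaming the experiment reshuffles visitors for the next test.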

What does a hypothesis look like?

If your central question is “Would a discount work for my business?”, a solid hypothesis might be: “Putting a 5% discount on our Hero product for new visitors could increase revenue per user and lead to more conversions.” Later, you analyse the gathered data and either confirm the hypothesis or reject it and form a new one.

#3 Always know which variables you are testing

Use variables that are easy to measure throughout the test. For example, if you are experimenting with discount values, you want to know whether a 25 per cent discount gives a higher conversion rate than, say, 15 per cent. If it does, great; if conversion stays the same, go with the smaller discount and keep the difference. Try several discount values within a single test and pick the best by looking at the gathered data. Sometimes the answer is not to lower the percentage but to raise it. Experiment to find the best option for your product.

In most A/B tests you change independent variables, such as the discount rate, and monitor dependent variables, such as conversions, revenue or profit, depending on what you changed and what your goals are. Discounts affect all of these, but the conversion rate alone does not show how much a bigger discount costs you, so we prefer to judge discount tests on profit or revenue.
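To see why conversion rate alone can mislead, compare profit per visitor across several discount levels. The Python sketch below uses a hypothetical price and unit cost and made-up visitor and order counts; with these numbers the 25 per cent variant converts best but earns the least per visitor. It deliberately ignores effects such as repeat purchases, which a real analysis might need to include.

```python
from dataclasses import dataclass


@dataclass
class DiscountVariant:
    name: str
    discount: float   # fraction of the list price, e.g. 0.15 for 15 per cent
    visitors: int
    orders: int

    @property
    def conversion_rate(self) -> float:
        return self.orders / self.visitors


def profit_per_visitor(v: DiscountVariant, price: float, unit_cost: float) -> float:
    """Average profit each visitor brings, after the discount and unit cost."""
    margin_per_order = price * (1 - v.discount) - unit_cost
    return v.orders * margin_per_order / v.visitors


# Hypothetical product economics and made-up test counts, for illustration only.
PRICE, UNIT_COST = 100.0, 60.0
variants = [
    DiscountVariant("no discount", 0.00, 10_000, 200),
    DiscountVariant("15% off", 0.15, 10_000, 260),
    DiscountVariant("25% off", 0.25, 10_000, 300),
]

for v in variants:
    print(f"{v.name:<12} conversion {v.conversion_rate:.1%}  "
          f"profit per visitor {profit_per_visitor(v, PRICE, UNIT_COST):.2f}")

best_by_conversion = max(variants, key=lambda v: v.conversion_rate)
best_by_profit = max(variants, key=lambda v: profit_per_visitor(v, PRICE, UNIT_COST))
print("Best by conversion:", best_by_conversion.name)
print("Best by profit:", best_by_profit.name)
```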

#4 Before you start the A/B testing, know what you are measuring

Most A/B tests that fail do so because of a bad setup that yields unreliable or unreadable results instead of what you need. If an independent variable affects more than one dependent variable, devise a plan in advance, or at least decide beforehand, whether a decrease in one is worth an increase in another.
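One way to settle such a trade-off before the test starts is to write the decision rule down as code or config. The function below is a hypothetical Python sketch: the metric names and tolerances are illustrative assumptions, and in practice each change would also need to be statistically reliable rather than just a point estimate.

```python
def meets_plan(primary_change: float,
               secondary_changes: dict,
               max_allowed_drop: dict,
               min_primary_gain: float = 0.0) -> bool:
    """Check a result against a decision rule agreed before the test started.

    All changes are relative, e.g. 0.08 for +8 per cent and -0.03 for -3 per cent.
    The variant "wins" only if the primary metric improved by more than
    min_primary_gain and no secondary metric dropped by more than its tolerance.
    Metrics without an agreed tolerance are not allowed to drop at all.
    """
    if primary_change <= min_primary_gain:
        return False
    for metric, change in secondary_changes.items():
        if change < -max_allowed_drop.get(metric, 0.0):
            return False
    return True


# Hypothetical plan: more add-to-cart events are worth up to a 5 per cent
# drop in average order value, but revenue per visitor must not fall.
tolerances = {"average_order_value": 0.05, "revenue_per_visitor": 0.0}
print(meets_plan(0.08, {"average_order_value": -0.03, "revenue_per_visitor": 0.01}, tolerances))
print(meets_plan(0.08, {"average_order_value": -0.07, "revenue_per_visitor": 0.01}, tolerances))
```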

#5 Let the A/B Testing begin

Sometimes a simple change can increase your conversion rate by a fifth, especially if you had neglected to address one of your visitors' major objections by stating a fundamental benefit of your product.

Once you have nailed down the question you are testing, formed a hypothesis, understood how the dependent and independent variables interact, and planned how to measure them, you can run the experiment.

Once it is running, allow at least one month to pass and make sure your results are statistically significant before drawing any conclusions. For example, if you have tested 10 subjects and 3 of them converted, that does not mean you have a 30 per cent conversion rate: ten subjects are far too few for that figure to be reliable. Gather as much data as possible before analysing.
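The 3-out-of-10 example can be made concrete with a confidence interval, and a comparison between two variants with a significance test. The Python sketch below uses the Wilson score interval and a pooled two-proportion z-test, two standard textbook choices among several, relying only on the standard library (`statistics.NormalDist`, Python 3.8+). The counts are made up. For 3 conversions out of 10 visitors the 95 per cent interval runs from roughly 11 to 60 per cent, which is why the 30 per cent figure can't be trusted.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(conversions: int, visitors: int, confidence: float = 0.95):
    """Wilson score interval for a conversion rate."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    p = conversions / visitors
    denom = 1 + z**2 / visitors
    centre = (p + z**2 / (2 * visitors)) / denom
    half_width = z * sqrt(p * (1 - p) / visitors + z**2 / (4 * visitors**2)) / denom
    return centre - half_width, centre + half_width


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# 3 conversions out of 10 visitors: the plausible range is very wide.
low, high = wilson_interval(3, 10)
print(f"3/10 -> 95% interval {low:.0%} to {high:.0%}")

# Made-up counts: control 200/10,000 vs variant 260/10,000.
z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With the made-up counts above, the difference between 2.0 and 2.6 per cent comes out well below the conventional 0.05 threshold, while the same rates measured on a few dozen visitors would not.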

Sometimes you get to experiment with different audiences, and cold, warm and hot traffic react differently to different stimuli. Know your audience. From time to time, you can run niche A/B tests that target separate audiences. Although these can take much longer to reach statistically significant results, they are often worth it.
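A standard normal-approximation formula estimates how many visitors each variant needs before a given uplift can be detected, which also shows why niche tests take longer: the required sample stays the same while the daily traffic shrinks. The Python sketch below assumes a two-sided test at 5 per cent significance and 80 per cent power; the baseline rate, target uplift and traffic figures are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_uplift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per variant to detect the given uplift
    with a two-sided two-proportion test (normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


def days_needed(per_variant: int, variants: int, daily_visitors: int) -> int:
    """Days until every variant has received its share of traffic."""
    return ceil(per_variant * variants / daily_visitors)


# Hypothetical numbers: 2% baseline conversion, hoping to detect a 20% relative uplift.
n = sample_size_per_variant(0.02, 0.20)
print("Visitors needed per variant:", n)
print("Days with all traffic (5,000/day):", days_needed(n, 2, 5_000))
print("Days with one niche segment (500/day):", days_needed(n, 2, 500))
```

With these assumed inputs, a segment that receives a tenth of the traffic needs roughly ten times as many days to reach the same sample size.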

#6 Results Analysis time

Contrary to popular belief, the purpose of A/B testing is not only to increase revenue and conversion rates. Acquiring knowledge is the essence of every experiment, in e-commerce or elsewhere.

Whatever you learn, you can always intuitively and empirically apply it in future campaigns, website designs, advertisements, etc.

When reviewing past tests, check whether each uplift was positive or negative, then go back to the hypothesis you originally tested and determine whether it was the right one and whether the results confirmed or rejected it.

To be sure your analysis is on the right track, make sure the data you gathered lets you answer all of these questions:

- Did any unexpected variables emerge in the results by the end of the test?

- Did all of your tested audiences respond in a similar way? If not, which ones responded differently, and can you figure out why? By doing so, you actually get to know your audience. Remember, you aren't only testing your website or product; you are also studying your audiences.

- Have you analysed how the independent variables you adjusted affected dependent variables other than the primary one?

- How would a different degree of change in the independent variable affect the outcome? For example, if you increased the saturation of the colour you are testing even further, would conversion rise even higher? Test it.
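Checking whether audiences responded alike starts with splitting the results by segment. The Python sketch below computes the relative uplift per segment from per-visitor rows; the row format and the counts are hypothetical. In this made-up data the two segments pooled together look almost flat (40 control conversions against 39), while separately they move in opposite directions. Each segment's difference still needs its own significance check, and slicing results into many segments raises the chance of finding a spurious difference.

```python
from collections import defaultdict


def uplift_by_segment(rows):
    """Relative uplift of treatment over control, per audience segment.

    rows: iterable of (segment, variant, converted) tuples, one per visitor,
    where variant is "control" or "treatment" and converted is a bool.
    Segments where either arm has no visitors, or control has no conversions,
    are skipped because the relative uplift is undefined there.
    """
    # counts[segment][variant] = [conversions, visitors]
    counts = defaultdict(lambda: {"control": [0, 0], "treatment": [0, 0]})
    for segment, variant, converted in rows:
        counts[segment][variant][0] += int(converted)
        counts[segment][variant][1] += 1

    uplifts = {}
    for segment, arms in counts.items():
        (conv_c, n_c), (conv_t, n_t) = arms["control"], arms["treatment"]
        if n_c == 0 or n_t == 0 or conv_c == 0:
            continue
        rate_c, rate_t = conv_c / n_c, conv_t / n_t
        uplifts[segment] = (rate_t - rate_c) / rate_c
    return uplifts


def make_rows(segment, variant, conversions, visitors):
    """Build hypothetical per-visitor rows for the example."""
    return [(segment, variant, i < conversions) for i in range(visitors)]


rows = (make_rows("cold", "control", 10, 100) + make_rows("cold", "treatment", 15, 100)
        + make_rows("hot", "control", 30, 100) + make_rows("hot", "treatment", 24, 100))
for segment, uplift in uplift_by_segment(rows).items():
    print(f"{segment}: {uplift:+.0%}")
```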

Once you can answer these questions, it is time to optimize your website, product or campaign.

#7 Optimize according to the results

Now that you have the results and have analyzed them thoroughly, you can optimize your website. If your hypothesis was confirmed and the test was successful, implement the changes. If not, continue with another test.

You can use all the insights and data gathered from the audiences included in the test for further studies or to set up additional experiments in the future.

Getting the most out of every A/B experiment

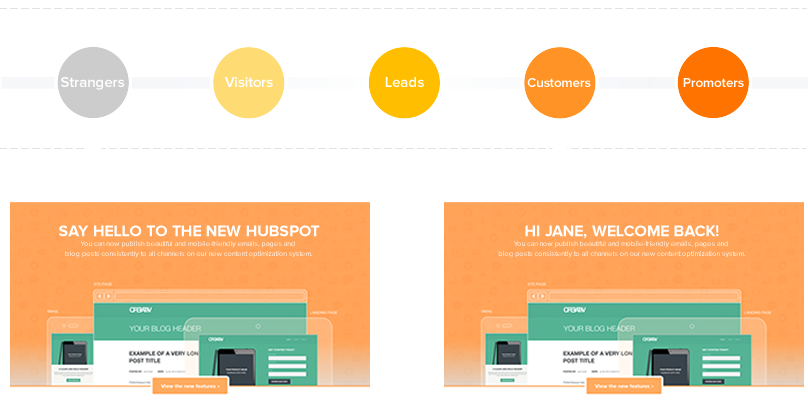

Turning one-time visitors into subscribers or loyal customers, and then into promoters and brand ambassadors, is no easy task. It takes a lot of A/B testing to make every little element on the page feel personal and engaging to them.

If you've noticed that your product resonates strongly with a particular niche audience, set up rules so that visitors who belong to that audience are treated differently. For example, if you sell a product that is used both in construction and by homeowners for repairs, you could show visitors you know to be professionals more advanced terminology that demonstrates your expertise.
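Such rules can be kept as a small ordered list of predicates mapped to segments, so each segment gets its own version of the page and can later be tested on its own. The Python sketch below is purely illustrative: the visitor signals (`has_trade_account`, a `contractor-tools` category), the segment names and the copy text are all hypothetical, not taken from any particular analytics tool.

```python
from dataclasses import dataclass, field


@dataclass
class Visitor:
    # Hypothetical signals; use whatever your analytics actually records.
    viewed_categories: set = field(default_factory=set)
    has_trade_account: bool = False


# Ordered rules: the first matching predicate decides the segment.
RULES = [
    ("professional", lambda v: v.has_trade_account),
    ("professional", lambda v: "contractor-tools" in v.viewed_categories),
]

# Hypothetical hero copy for each segment.
COPY = {
    "professional": "Rated for site use: load specs, certifications and bulk pricing.",
    "general": "Fix it yourself at home: easy to use, no experience needed.",
}


def segment_for(visitor: Visitor) -> str:
    """Return the first segment whose rule matches, or "general"."""
    for segment, matches in RULES:
        if matches(visitor):
            return segment
    return "general"


def hero_copy(visitor: Visitor) -> str:
    return COPY[segment_for(visitor)]


print(hero_copy(Visitor(has_trade_account=True)))
print(hero_copy(Visitor(viewed_categories={"garden"})))
```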

A large share of A/B tests end up flat. A flat test is one whose results show no significant change in performance.
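One common way to make "no significant change" precise is to compute a confidence interval for the difference between variants and call the test flat when that interval contains zero. The Python sketch below assumes a 95 per cent normal-approximation (Wald) interval and made-up counts. A flat label means no change was detected at this sample size, not that the change has no effect, which is one reason audience size matters.

```python
from math import sqrt
from statistics import NormalDist


def classify_test(conv_a: int, n_a: int, conv_b: int, n_b: int,
                  confidence: float = 0.95):
    """Label a test positive, negative or flat from a confidence interval
    on the difference in conversion rate (variant B minus control A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Unpooled standard error of the difference between two proportions.
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    low, high = diff - z * se, diff + z * se
    if low > 0:
        label = "positive"
    elif high < 0:
        label = "negative"
    else:
        label = "flat"  # the interval includes zero: no detectable change
    return label, (low, high)


# Made-up counts for illustration.
print(classify_test(200, 10_000, 260, 10_000)[0])
print(classify_test(200, 10_000, 205, 10_000)[0])
print(classify_test(260, 10_000, 200, 10_000)[0])
```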

What are the main reasons behind getting a flat test?

- Poor timing

- An audience that is too small or too large for the given test

- Unforeseen variables

- Incorrectly assumed connections between the dependent and independent variables

Although flat results can be disappointing, don't get discouraged: flat tests are often the stepping stone to greater results and positive uplifts in the long run.

Big uplifts require big, risky changes, and that can be frightening because you also risk a negative uplift. Don't be scared of change: if you keep a backup of the original, you can always return to where you started if something gets worse. Without risks, no business can grow.

You can change almost anything on your website to personalize the experience for a niche user segment: search bars, the logo, navigation, menus, calls to action, colours, images and the overall message.

Many big companies that run A/B tests reconsider their audiences when a test comes out flat. A flat overall result can hide some audiences that reacted positively and others that reacted negatively or were indifferent.

Sometimes a negative test can also yield positive results in an unexpected way. Imagine a company investing thousands of dollars in product videos and images to keep the content on its website constantly updated. It then runs an A/B test to determine whether that investment produces any positive results. If the result is a slight increase in revenue that does not cover the cost of the investment, the test is negative.

But the company now knows it can spend its budget better: it can stop allocating funds to photography and focus on something else instead. In this way the A/B test yields a worthwhile result, just in another form.
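The arithmetic behind this example is simple, but it is worth writing down before the test: compare the incremental revenue (or profit) with what the change cost. The Python sketch below uses hypothetical figures and assumes both versions of the site were measured over the same period with comparable traffic; the optional margin parameter is an addition to the article's revenue-versus-cost comparison.

```python
def net_result(baseline_revenue: float, variant_revenue: float,
               investment: float, gross_margin: float = 1.0) -> float:
    """Incremental result of a change once its cost is paid.

    gross_margin=1.0 compares the revenue uplift directly with the cost, as in
    the example above; a real margin (e.g. 0.4) makes the comparison stricter.
    """
    uplift = variant_revenue - baseline_revenue
    return uplift * gross_margin - investment


# Hypothetical figures: revenue over the test period with and without the new
# photography, and what the photography cost.
baseline, variant, cost = 200_000.0, 206_000.0, 8_000.0
print("Revenue uplift:", variant - baseline)
print("Net result vs cost:", net_result(baseline, variant, cost))
print("Net result at a 40% margin:", net_result(baseline, variant, cost, 0.4))
```

Here revenue rises, yet the net result is negative under both assumptions, which is exactly the kind of negative test that still tells the company where not to spend.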

Final Words

Sometimes you will get a flat test; it's inevitable. Failed tests should prompt you to reassess the assumptions you started with. Maybe your hypothesis is wrong? The closer you get to reality, the smarter your business decisions will be.

Moreover, from time to time you will run the right A/B test with the right dependent and independent variables but target the wrong audience. Test audiences, switch things up, get to know your visitors and give them the best, most personalized experience.